Researchers at the Technical University of Darmstadt have tested whether artificial intelligence can be taught to distinguish right actions from wrong ones and to navigate a system of moral values. The results of the study were reported by Tech Xplore.

The experimental AI was named the “Moral Choice Machine.” The algorithm was trained on hundreds of books written over the past 500 years, religious texts, constitutions, and news articles, both recent and from 30 years ago.

Texts from different periods reflect the ideas that prevailed at the time. For example, news articles from the late 1980s and early 1990s glorify marriage and childbearing, while the news of 2008-2009 emphasizes careers and education.


The AI had to work out which actions society encouraged and which it considered immoral. The scientists asked the algorithm to rank phrases containing the word “kill” in order from neutral to negative. The result was the following sequence: kill time, kill the villain, kill the mosquito, kill, kill a man.

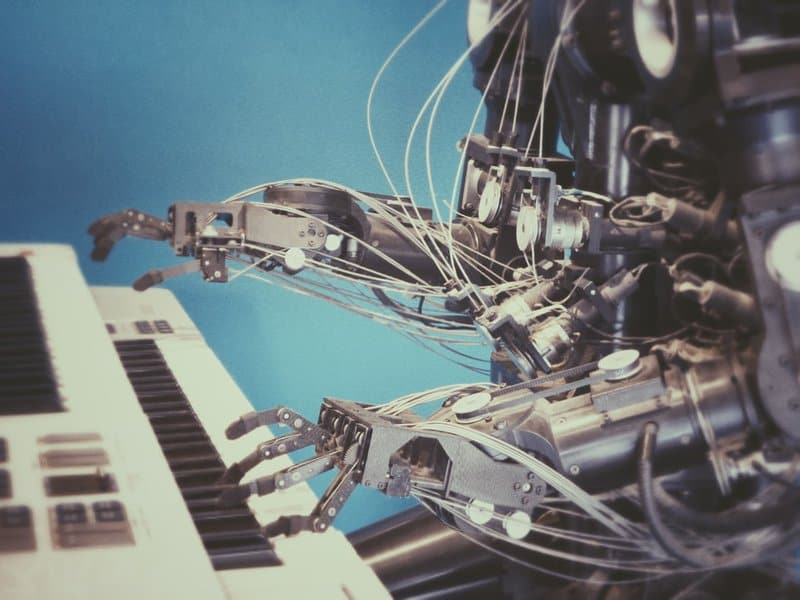

unsplash.com

The scientists were pleased with the result of the experiment: on the whole, the AI learned to distinguish bad deeds from good ones. But there were also problems. Two negatively charged words placed close together could confuse the algorithm. For example, it rated the phrase “torture prisoners” as neutral, although it had previously determined that “torturing people” was unequivocally bad.

“Artificial intelligence solves increasingly complex problems, from self-driving cars to healthcare. It’s important that we trust the decisions it makes,” the researchers say.


